Introduction

Hybrid systems combine hardware and software technologies for the implementation of applications in the areas of multimedia and mixed reality. Our projects consist of components for computational perception of audio and video data streams, intelligent processing by machine learning and other forms of classification, physical interaction with sophisticated hardware prototypes and proper visualization and sonification methods for the blending of hardware and software.

Below, you can find a selection of projects that have been developed by staff and students since 2015. Earlier projects, such as the automated video soccer table Vizler, can be found on the website of the former Interactive Media Systems group (link to the static snapshot). If you would like to participate in one of the open projects, please go to the list of current topics for student projects. If you have any questions or comments, please send me an e-mail.

Tuvoli Radio Receiver

2014-2019, audio project. An internet-based radio that filters ads and speech. The FM tuner can be configured with the three buttons in the middle of the front panel. One filters ads, the second music, the third speech. Hence, if you like the music of your favorite station yet not the comments of the DJ, press a button and the filtered speech is replaced by silence or music from your USB stick.

Details

- Hard- & Software: Raspberry Pi 3B, FM chip, Raspbian, Python

- Solution: Processing of live radio, frequency transformation, aggregation of Barkhausen bands, outlier filtering, classification by thresholding

- Performance: Speech, music and ads are recognized within one second in at least 98% of all cases.

- Discussion: As alternatives, we tried classic feature engineering (insufficient recognition rate) and MFCCs with CNN-based classification (too slow)

- Contributions: Alexander Bayer (electronics), Lukas Graf (CNN approach), Julius Gruber (classic feature engineering approach)
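
The processing chain listed under Solution (frequency transformation, aggregation of Bark/Barkhausen bands, outlier filtering, thresholding) could be sketched roughly as follows. This is only an illustration and not the project code: the band edges are the commonly cited Zwicker values, while the function names, the median window, the neutral labels "above"/"below" and the choice of feature are assumptions made for the example.

```python
import cmath
import math
from statistics import median

# Commonly cited Bark band edges in Hz, truncated at 5300 Hz for this sketch.
BARK_EDGES_HZ = [0, 100, 200, 300, 400, 510, 630, 770, 920, 1080, 1270,
                 1480, 1720, 2000, 2320, 2700, 3150, 3700, 4400, 5300]

def power_spectrum(frame):
    """Naive DFT power spectrum (bins 0..N/2); adequate for short frames."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(frame))
        spectrum.append(abs(acc) ** 2)
    return spectrum

def bark_band_energies(frame, sample_rate):
    """Sum the power of all DFT bins that fall into each Bark band."""
    spec = power_spectrum(frame)
    bin_hz = sample_rate / len(frame)
    energies = [0.0] * (len(BARK_EDGES_HZ) - 1)
    for k, power in enumerate(spec):
        freq = k * bin_hz
        for b in range(len(energies)):
            if BARK_EDGES_HZ[b] <= freq < BARK_EDGES_HZ[b + 1]:
                energies[b] += power
                break  # bins above the last edge are simply ignored
    return energies

def median_filter(values, window=5):
    """Suppress single-frame outliers with a sliding median."""
    half = window // 2
    return [median(values[max(0, i - half): i + half + 1])
            for i in range(len(values))]

def classify(feature_values, threshold):
    """Compare the smoothed per-frame feature to a (hypothetical) threshold."""
    return ["above" if v >= threshold else "below"
            for v in median_filter(feature_values)]
```

Which scalar feature is derived from the band energies, and which threshold separates speech, music and ads, is not stated above and is therefore left open in the sketch.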

If you would like to borrow the radio receiver for testing, please send me an e-mail.

Base Dosimeter

2018-2020, audio project. Fair psychoacoustic measurement of noise in indoor environments. State-of-the-art measurement of noise inside buildings suffers from the unfair aspect that the norm (ISO 226) over-emphasizes frequency bands that are irrelevant: Noise is created by bass sounds, yet the loudness is mostly a function of the (absent) higher bands. The Base Dosimeter compensates for this shortcoming by combining a high-quality recording circuit with a Raspberry Pi that acts as dosimeter and processor of psychoacoustic noise indicators.

Details

- Hard- & Software: Sonarworks microphone, Raspberry Pi 4 processor, GPS receiver, GPRS sender module, custom amplifier and power supply, programmed in Python

- Solution: Records 11025 samples per second, computes RMS and loudness in phon as defined by the norm, but limited to ≤ 1000 Hz, and transmits measurement + GPS data to a remote server

- Performance: With hardly any noise from the power supply, the results are competitive with top-level noise measurement hardware

- Contributions: 95% by Simon Weber (hardware design, implementation and programming of software)
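
The RMS part of the measurement chain can be illustrated with a few lines of Python. This is a minimal sketch under stated assumptions: levels are given in dB relative to full scale rather than as calibrated sound pressure, the first-order low-pass is only a stand-in for the band limit at 1000 Hz, and the conversion to phon according to ISO 226 is deliberately left out because it requires the contour tables of the norm. All function names are hypothetical.

```python
import math

SAMPLE_RATE = 11025  # samples per second, as stated in the project description

def rms(samples):
    """Root mean square of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def level_db(samples, reference=1.0):
    """Level in dB relative to `reference` (full scale, not calibrated SPL)."""
    value = rms(samples)
    return float("-inf") if value == 0 else 20.0 * math.log10(value / reference)

def one_pole_lowpass(samples, cutoff_hz=1000.0, sample_rate=SAMPLE_RATE):
    """Simple first-order low-pass, assumed here as the <=1000 Hz band limit."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for s in samples:
        y += alpha * (s - y)
        out.append(y)
    return out

def block_levels(samples, block_size=SAMPLE_RATE):
    """Split a recording into one-second blocks and return one level per block."""
    return [level_db(samples[i:i + block_size])
            for i in range(0, len(samples) - block_size + 1, block_size)]
```

A real dosimeter would additionally need a calibration constant that maps full-scale values to sound pressure; that constant depends on the microphone and amplifier and is not given here.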

If you would like to borrow the noise dosimeter for testing, please send me an e-mail.

Antidolby Loudness Balancer

2020, audio project. Automated leveling of feature films with high levels for action scenes/music. Often, modern feature films (e.g. Hollywood productions) use very unequal levels for dialogue and music/environmental sounds. In settings where the needs of third parties (sleeping children, neighbors, etc.) have to be respected, this can be very annoying. Hence, the Antidolby Loudness Balancer learns remote control IR patterns, measures the sound pressure coming from your TV and – if necessary – reduces the level by sending the appropriate commands.

Details

- Hard- & Software: Arduino Uno, a simple microphone, potentiometer and IR diode

- Solution: Records audio via the mic, computes the RMS and uses adaptive thresholding (twin comparison) for noise leveling

- Performance: All parameters can be controlled: min./max. level, reaction time, response time (minimum interval between an action and its inverse)

- Contributions: –
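
The leveling logic can be illustrated with a two-threshold controller. The sketch below is written in Python for readability (the device itself runs on an Arduino) and reflects only one possible reading of the description: the class name, the command strings and the fixed lower/upper thresholds are assumptions, and the adaptive part of the thresholding is not reproduced. What it does show is the response time, i.e. the minimum interval between an action and its inverse.

```python
import math

class LevelController:
    """Two-threshold (hysteresis) volume controller with an inverse-action lockout."""

    def __init__(self, lower, upper, min_interval):
        self.lower = lower                # below this RMS: turn the volume up
        self.upper = upper                # above this RMS: turn the volume down
        self.min_interval = min_interval  # seconds between an action and its inverse
        self.last_action = None
        self.last_time = -math.inf

    def update(self, rms_value, now):
        """Return 'volume_down', 'volume_up' or None for the current RMS value."""
        if rms_value > self.upper:
            action = "volume_down"
        elif rms_value < self.lower:
            action = "volume_up"
        else:
            return None  # inside the accepted band: nothing to do
        # Block the inverse of the previous action until min_interval has passed.
        is_inverse = self.last_action is not None and action != self.last_action
        if is_inverse and now - self.last_time < self.min_interval:
            return None
        self.last_action, self.last_time = action, now
        return action
```

The gap between the two thresholds keeps the controller from toggling on small fluctuations, and the lockout prevents a loud explosion from being followed immediately by a volume increase during the next quiet dialogue line.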

If you would like to make your own Antidolby, please send me an e-mail. I am happy to share the bill of materials, software and 3D models of the case.

Bilderstutzen

2017-2020, multimedia project. Skilled hunting, no hurting. The Bilderstutzen is a device designed for old-fashioned hunting of animals, yet in contrast to a gun, animals are not shot at but just photographed. The shape of the Bilderstutzen has the typical dimensions and proportions of a hunting rifle. The trigger runs stiffly and has the typical characteristics of a gun lock. Aiming is done with a notch and bead sight. Once fired, the Bilderstutzen creates a sound that resembles an explosion and is loud enough to scare the target away. Technically, the system is based on an embedded computer and a self-developed autofocus camera with a zoom lens. Images of shots are processed through deep learning on the edge in order to identify (1) whether an animal is present in the scene and, if so, (2) whether it would have been killed or wounded. In the latter case, a hash value is created that can be transferred via a mobile app to a server, where a trophy of the animal is 3D printed. If the Bilderstutzen is fired at a human being (detected by the deep net), it locks the operating system and renders itself unusable. Hence, the Bilderstutzen combines all the skills of the art of hunting without its negative aspects.

Details

- Hard- & Software: Welded steel frame, 20mm barrel, Raspberry Pi 4 for processing and CNN classification, zoom lens + stepper motor as autofocus, various sensors and actuators, OpenCV and TensorFlow for image recording and image analysis + classification

- Solution: State transition machine for gun control, convolutional neural net for object detection, transfer learning for the classification of the shot success (kill/wounded/missed)

- Performance: F1 score of 92% for the animal recognition, F1 score of 84% for the shot success estimation; both values are preliminary (work in progress)

- Contributions: –
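
The state machine for gun control mentioned under Solution might look like the following table-driven sketch. The states and event names are purely hypothetical and chosen for illustration; the only behavior taken from the description is that detecting a human being leads to a locked state that cannot be left again.

```python
from enum import Enum, auto

class State(Enum):
    IDLE = auto()
    AIMING = auto()
    FIRED = auto()
    LOCKED = auto()

# (current state, event) -> next state; all names are assumptions for this sketch.
TRANSITIONS = {
    (State.IDLE, "raise"): State.AIMING,
    (State.AIMING, "lower"): State.IDLE,
    (State.AIMING, "trigger"): State.FIRED,
    (State.FIRED, "animal_hit"): State.IDLE,
    (State.FIRED, "missed"): State.IDLE,
    (State.FIRED, "human_detected"): State.LOCKED,
}

def step(state, event):
    """Return the next state; unknown events leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)

def run(events, state=State.IDLE):
    """Feed a sequence of events through the machine and return the final state."""
    for event in events:
        state = step(state, event)
    return state
```

Because `LOCKED` has no outgoing transitions in the table, every later event is ignored once a human has been detected.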

If you would like to test the Bilderstutzen, please send me an e-mail.

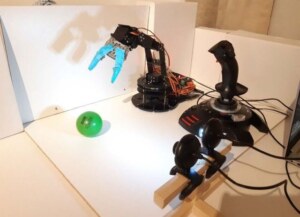

Robot Arm Sonification

Since 2018, multimedia project. Can you control a robot arm better if you can not only see it but also hear the distance of the manipulated object? In this project, we tried to answer this question. To this end, a setup was developed that includes a state-of-the-art training robot, a joystick and a stereo camera. Sonification is based on beep sounds describing distance from and orientation towards the object in question.

Details

- Hard- & Software: Raspberry Pi 4 processor, Joy.it training robot, two webcams for stereo vision, OpenCV, Python

- Performance: The answer to the research question is “yes”: Sonification both helps the learning process and keeps the accuracy in practical application higher than if only visual information were available.

- Contributions: 95% Günther Windsperger (hardware setup, implementation)
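
One conceivable way to turn distance and orientation into beep sounds is sketched below. The concrete mapping is an assumption for illustration only (the description above does not specify it): distance controls the pause between beeps, and the horizontal angle towards the object controls the left/right balance. All names and default values are hypothetical.

```python
import math

def beep_interval(distance_m, max_distance_m=0.5, fastest_s=0.05, slowest_s=1.0):
    """Closer objects produce faster beeps (linear mapping, clamped to the range)."""
    ratio = min(max(distance_m / max_distance_m, 0.0), 1.0)
    return fastest_s + ratio * (slowest_s - fastest_s)

def stereo_gains(angle_deg, max_angle_deg=90.0):
    """Constant-power panning: negative angles go left, positive angles go right."""
    pan = min(max(angle_deg / max_angle_deg, -1.0), 1.0)  # -1 .. 1
    theta = (pan + 1.0) * math.pi / 4.0                   # 0 .. pi/2
    return math.cos(theta), math.sin(theta)               # (left gain, right gain)
```

With these defaults, an object straight ahead yields equal gains on both channels, and the beep rate rises steadily as the gripper approaches the object.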

Noisometer

2012-2015, multimedia project. Use your smartphone as a noise measurement device. Strictly speaking, the Noisometer app was not a hybrid system. Yet it combined smartphone hardware with psychoacoustic transformations and location-based services: Audio data was recorded over the microphone, filtered and processed, and coefficients were computed. The resulting loudness values were forwarded to a server, aggregated and displayed on a map.

Details

- Hard- & Software: Any Android smartphone, implementation in Java

- Solution: The major issue is computing reliable noise values from different types of phone hardware. Achieving this goal required detailed testing and evaluation of filtering methods.

- Performance: The final prototype produced reliable measurements & could be used as a dosimeter for the long-term evaluation of environmental noise.

- Contributions: Alexander Schatten (project management), Thomas Teufel & Johannes Hartl (implementation)
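
The server-side aggregation of loudness values for the map can be illustrated as follows. The sketch is written in Python although the app itself was implemented in Java, and the grid size, the tuple layout and the function names are assumptions. It uses the standard energetic mean for levels in dB, since averaging the dB numbers directly would underestimate loud events; whether the original server aggregated in exactly this way is not documented here.

```python
import math
from collections import defaultdict

def energetic_mean_db(levels_db):
    """Average sound levels on the energy scale instead of averaging dB values."""
    mean_power = sum(10 ** (level / 10.0) for level in levels_db) / len(levels_db)
    return 10.0 * math.log10(mean_power)

def aggregate_by_cell(measurements, cell_deg=0.001):
    """Group (lat, lon, level_db) tuples into grid cells and average per cell."""
    cells = defaultdict(list)
    for lat, lon, level in measurements:
        key = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        cells[key].append(level)
    return {key: energetic_mean_db(values) for key, values in cells.items()}
```

For example, the energetic mean of 50 dB and 70 dB is about 67 dB, not 60 dB, because the louder measurement dominates the acoustic energy.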

Please note that the Noisometer cannot be found in the Play store anymore. If you are still interested in the app, please send me an e-mail.

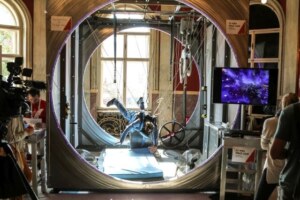

Virtual Jumpcube

Since 2014, mixed reality project. A unique physical-virtual skydiving experience. The Jumpcube enables humans to jump out of a virtual plane while performing a real physical jump. This combination creates a kick that is quite unique in mixed reality systems. Due to its popularity, the system has been tested by more than 2700 people since 2015.

Details

- Hard- & Software: 1500kg of steel, 2 PCs with recent Nvidia graphics cards, HTC Vive, approx. ten Raspberry Pis and ten Arduinos, wind machines, a water spray system, force simulation by servo motors, etc.

- Solution: Currently, we offer six different scenarios ranging from classical skydiving and skydiving in space to air racing against a plane & diving through the sewers of Vienna. All simulations include audiovisual, haptic and smell stimuli (via the Vragrancer system, see below).

- Contributions: Bela Eckermann (design, hardware implementation), Juri Berlanda, Luca Maestri, Tobias Froihofer (original content, controller setup, 3D models) & many other students.

- Sponsors: Waagner Biro Steel, Doka, European Society of Radiology, Schrack Seconet, BIG and many others – thank you very much!

For a more detailed description of the project including images, videos & a list of events, please refer to the project website.

Vragrancer Smell Engine

2016-2018, mixed reality project. Enabling the sense of smell in VR applications. The Vragrancer is a system that allows the use of up to eight different smells in mixed reality. It is based on the system ScentController by the German company Scent Communication. However, we had to develop our own controller and propagation system. The smells are delivered through a system of electric valves & tubes directly to the nose of the player.

Details

- Hard- & Software: ScentController.6, Raspberry Pi 3, Teflon tubes, electric 12V valves & a compressor

- Solution: The smell cartridges are also provided by Scent Communication and can be configured in almost any possible form. The palette includes, for example, the smell of a diesel engine, coffee, ham & a meadow in spring.

- Performance: Scents are delivered with a latency of ca. 200ms over 5m from the Vragrancer to the HMD

- Contributions: Scent Communication, Cologne (hardware system, scents), Juri Berlanda (controller)

If you would like to borrow the Vragrancer for evaluation, please send me an e-mail.

Vreeclimber Wall

Since 2018, mixed reality project. Physio-virtual rock climbing through space. The Vreeclimber wall combines a climbing treadmill with virtual reality hardware that allows for virtually climbing through space while the physical wall enables endless climbing with varying grips and inclinations of the wall.

Details

- Hard- & Software: Our self-made climbing treadmill, recent VR hardware, up to eight HTC Vive trackers, game design and controller implementation in Unity 3D, several Raspberry Pis and Arduinos for various control applications.

- Solution: Vive trackers determine the position of the extremities of the climber as well as the current rotation and state of the climbing wall. The hand position is approximated from its proximity to the nearest climbing grip. State-of-the-art climbing equipment guarantees that the climber is safe at all times.

- Discussion: The Vreeclimber system contains an add-on for wall climbing without VR head gear – the Vreeboulder system. This component provides an interactive game where a projector sets targets on the climbing wall that have to be reached by the climber in time. A sequence of levels with different wall speeds, wall inclinations and complexities of targets defines a simple yet entertaining 2D arcade game.

- Contributions: Onur Gürcay (game design), Ahmed Othman (controllers), and many other students

- Sponsors: the Doka company (construction material), the European Society of Radiology (funds), Boulderbar (two big boxes full of climbing shoes) – thank you very much!
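
The approximation of the hand position from the nearest climbing grip, as described under Solution, can be sketched in a few lines. The actual implementation is done in Unity 3D; the Python version below is only an illustration, and the coordinate convention, the belt model, the reach limit of 15 cm and the function names are assumptions.

```python
import math

def visible_grips(grips_on_belt, belt_offset_m, belt_length_m):
    """Shift grip heights by the belt travel; grips wrap around the treadmill."""
    return [(x, (y - belt_offset_m) % belt_length_m, z)
            for x, y, z in grips_on_belt]

def nearest_grip(tracker_pos, grips, max_distance_m=0.15):
    """Return the grip closest to the tracker, or None if none is within reach."""
    best = min(grips, key=lambda g: math.dist(tracker_pos, g), default=None)
    if best is None or math.dist(tracker_pos, best) > max_distance_m:
        return None
    return best
```

A typical use would be to update the grip positions from the measured wall state in every frame and then snap the virtual hand to `nearest_grip(...)` whenever it returns a grip.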

For a more detailed description of the project including images, videos & a list of events, please refer to the project website.

Vreeboulder Projection System

Since 2020, mixed reality project. Gameful mixed reality bouldering. The Vreeboulder Projection System employs the Vreeclimber wall for bouldering. Users play old-style arcade games while climbing on the rotating and tilting wall. Touch all targets before time runs out in order to achieve a new high score.

Details

- Hard- & Software: Our self-made climbing treadmill, a self-made CNC control system for the BenQ projector, Python, OpenCV and the PyGame library.

- Solution: Computer vision methods are employed for position tracking of the gamer, the GRBL library is used for the positioning of the projector (we are not able to afford a bigger projector), and PyGame is used for game design and rendering of sprites. Based on the platform, arbitrary 2D level games can be implemented.

- Discussion: Currently, since we do not have a proper mattress for bouldering (though the Vreeclimber was designed to be able to integrate one), we use the Vreeclimber rope system for securing the climbing person. However, all game elements are designed for bouldering applications and since no VR headgear is involved, could be directly switched to real bouldering.

- Contributions: –

- Sponsors: Many thanks to Hannes Kaufmann for donating a projector to the project!
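
The core rule of the game (touch all targets before time runs out) can be expressed independently of rendering and tracking. The following sketch deliberately leaves out PyGame, OpenCV and the projector control; the class name, the status strings, the target radius and the coordinate units are assumptions for illustration.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Level:
    targets: list            # (x, y) target centres in wall coordinates, metres
    time_limit_s: float      # time available for this level
    radius_m: float = 0.1    # a target counts as touched within this distance
    touched: set = field(default_factory=set)

    def update(self, climber_points, elapsed_s):
        """Mark targets touched by any tracked point and return the level status."""
        if elapsed_s > self.time_limit_s:
            return "time_up"
        for i, target in enumerate(self.targets):
            if any(math.dist(p, target) <= self.radius_m for p in climber_points):
                self.touched.add(i)
        return "cleared" if len(self.touched) == len(self.targets) else "running"
```

In a complete game, `climber_points` would come from the computer vision tracking, and `update` would be called once per frame with the time elapsed since the level started.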

If you would like to try the Vreeclimber or Vreeboulder system, please send me an e-mail.