Overview

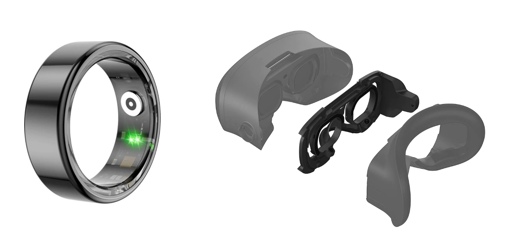

This project investigates an adaptive Virtual Reality (VR) system that integrates eye-tracking data from the HTC Vive Focus 3 (with the Eye Tracker add-on) with physiological signals from a smart ring (e.g., COLMI R02) or a smartwatch to support stress reduction and emotional self-regulation.

Users begin in a busy, noisy VR city environment. To reduce stress and stabilize focus, the world then gradually transitions into restorative nature scenes, such as a calm forest or an ocean with ambient sounds. Eye metrics (gaze fixation, pupil dilation, blinks) and biosignals (heart rate [HR], heart rate variability [HRV], and blood oxygen saturation [SpO₂]) are fused to estimate the user's arousal level. The calmer and more stable the signals, the faster and more rewarding the transition, creating an engaging form of biofeedback.
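A weighted-rule fusion of eye metrics and biosignals into a single arousal estimate could be sketched in Python as follows (the actual system would likely live in Unity/C#). Everything here is a hypothetical placeholder: the feature names, the normalization ranges, the weights, and the assumed direction of each signal (larger pupils and higher HR read as more aroused; longer fixations and higher HRV read as calmer). These would all need calibration. SpO₂ is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One time window of features (hypothetical field names)."""
    pupil_mm: float            # mean pupil diameter in mm
    blink_rate_per_min: float  # blinks per minute
    fixation_ms: float         # mean fixation duration in ms
    hr_bpm: float              # heart rate in beats per minute
    hrv_rmssd_ms: float        # HRV as RMSSD in ms


# Hypothetical (low, high) ranges: `low` maps to 0, `high` maps to 1.
RANGES = {
    "pupil_mm": (2.5, 6.0),
    "blink_rate_per_min": (10.0, 30.0),
    "fixation_ms": (150.0, 400.0),
    "hr_bpm": (55.0, 110.0),
    "hrv_rmssd_ms": (15.0, 80.0),
}

# Hypothetical weights. A negative sign means "higher value = calmer" (assumption).
WEIGHTS = {
    "pupil_mm": 0.25,
    "blink_rate_per_min": 0.10,
    "fixation_ms": -0.15,
    "hr_bpm": 0.25,
    "hrv_rmssd_ms": -0.25,
}


def normalize(value: float, low: float, high: float) -> float:
    """Linearly map value to [0, 1], clamping outside the range."""
    x = (value - low) / (high - low)
    return min(1.0, max(0.0, x))


def arousal_index(s: Sample) -> float:
    """Weighted average of normalized features; 0 = calm, 1 = highly aroused."""
    total = sum(abs(w) for w in WEIGHTS.values())
    score = 0.0
    for name, w in WEIGHTS.items():
        x = normalize(getattr(s, name), *RANGES[name])
        # Negative weights contribute (1 - x), so every term rises with arousal.
        score += abs(w) * (x if w > 0 else 1.0 - x)
    return score / total
```

Fixed rules like these are easy to inspect and tune for a small demo. Their weakness is that the ranges are shared across users.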

Tasks

– Course Project

- Integrate eye tracking (gaze, pupil size) from the Vive Focus 3 via OpenXR.

- Stream biosignals (HR/HRV/SpO₂) from the COLMI R02 via BLE.

- Build a simple fusion model based on weighted rules.

- Implement threshold-based transitions from city → (park) → nature.

- Run a small demo test with 2–3 users.
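For the BLE streaming step, the sketch below parses a heart-rate notification and derives HRV as RMSSD. It assumes the wearable exposes the standard Bluetooth GATT Heart Rate Measurement characteristic (0x2A37), which may include RR intervals in units of 1/1024 s. Whether the COLMI R02 offers this standard service, or only a vendor-specific protocol, is an open assumption that needs checking. The function names are hypothetical.

```python
import math


def parse_heart_rate_measurement(data: bytes) -> tuple[int, list[float]]:
    """Parse a GATT Heart Rate Measurement (0x2A37) payload.

    Returns (heart_rate_bpm, rr_intervals_ms).
    """
    flags = data[0]
    offset = 1
    if flags & 0x01:  # bit 0 set: heart rate is a little-endian uint16
        hr = int.from_bytes(data[offset:offset + 2], "little")
        offset += 2
    else:             # bit 0 clear: heart rate is a uint8
        hr = data[offset]
        offset += 1
    if flags & 0x08:  # bit 3: Energy Expended (uint16) present; skip it
        offset += 2
    rr_ms = []
    if flags & 0x10:  # bit 4: one or more RR intervals (uint16, 1/1024 s units)
        while offset + 1 < len(data):
            raw = int.from_bytes(data[offset:offset + 2], "little")
            rr_ms.append(raw * 1000.0 / 1024.0)
            offset += 2
    return hr, rr_ms


def rmssd(rr_ms: list[float]) -> float:
    """Root mean square of successive RR-interval differences, in ms."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))
```

On a PC, a BLE library such as bleak could subscribe to these notifications with `BleakClient.start_notify` and pass each payload to the parser. RMSSD would then be computed over a rolling window of recent RR intervals, not over a single notification.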

– Bachelor Thesis

- Develop a fusion model combining gaze and biosignals into an arousal index.

- Build a multi-stage environment: city → (park) → nature.

- Apply positive nudging: calmer signals speed up the transition.

- Run a pilot study (5–10 users) with questionnaires and HRV/pupil metrics.
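One way to combine the multi-stage environment with positive nudging is a small controller that accumulates progress only while arousal is below a threshold, and accumulates it faster the calmer the user is. This is a sketch under assumptions. The class name and the parameters `calm_threshold`, `base_rate`, and `stage_length` are hypothetical. The choice to pause progress under high arousal, instead of moving the user back, is a design choice, not something the project specifies.

```python
STAGES = ["city", "park", "nature"]


class TransitionController:
    """Advance city -> park -> nature; calmer input advances faster.

    Hypothetical parameters:
      calm_threshold: arousal below which progress accumulates (0..1 scale)
      base_rate:      progress per second at zero arousal
      stage_length:   progress needed to complete one stage
    """

    def __init__(self, calm_threshold: float = 0.5, base_rate: float = 0.1,
                 stage_length: float = 1.0):
        self.calm_threshold = calm_threshold
        self.base_rate = base_rate
        self.stage_length = stage_length
        self.stage = 0
        self.progress = 0.0  # progress within the current stage

    def update(self, arousal: float, dt: float) -> str:
        """Feed one arousal estimate covering dt seconds; return the active stage."""
        if self.stage == len(STAGES) - 1:
            return STAGES[self.stage]  # already in the final stage
        if arousal < self.calm_threshold:
            # Positive nudging: the further below the threshold, the faster.
            calmness = (self.calm_threshold - arousal) / self.calm_threshold
            self.progress += self.base_rate * calmness * dt
        # High arousal only pauses progress; it never moves the user back.
        if self.progress >= self.stage_length:
            self.stage += 1
            self.progress = 0.0
        return STAGES[self.stage]

    def blend(self) -> float:
        """Cross-fade weight toward the next stage (0 = current, 1 = next)."""
        return self.progress / self.stage_length
```

In a Unity scene, `blend()` could drive audio volumes or a visual cross-fade between the current scene and the next one.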

– Master Thesis

- Create a robust multimodal framework with personalized baselines.

- Implement two conditions: forest vs. ocean transitions.

- Run a controlled study with a larger participant pool, comparing biofeedback vs. no-biofeedback conditions.
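Personalized baselines could take the form of a short resting calibration phase per user. Live values are then expressed as z-scores relative to that user, not against fixed population ranges. The sketch below assumes mean/standard-deviation baselines and a logistic squashing of a weighted z-score sum. Both `Baseline` and `personalized_arousal` are hypothetical names, and a more robust variant might use the median and MAD instead.

```python
import math
import statistics


class Baseline:
    """Per-user resting baseline for one signal (e.g., pupil diameter or HR)."""

    def __init__(self) -> None:
        self.samples: list[float] = []

    def add(self, value: float) -> None:
        """Record one sample during the calm calibration phase."""
        self.samples.append(value)

    def zscore(self, value: float) -> float:
        """Express a live value in standard deviations from this user's baseline."""
        if len(self.samples) < 2:
            raise ValueError("baseline needs at least two samples")
        mean = statistics.fmean(self.samples)
        sd = statistics.stdev(self.samples)
        if sd == 0:
            return 0.0
        return (value - mean) / sd


def personalized_arousal(z_scores: dict[str, float],
                         weights: dict[str, float]) -> float:
    """Squash a weighted sum of z-scores into (0, 1); 0.5 means 'at baseline'.

    Negative weights mark signals assumed to rise with calmness (e.g., HRV).
    """
    s = sum(weights[k] * z_scores[k] for k in weights)
    return 1.0 / (1.0 + math.exp(-s))
```

Because every user is compared with their own resting state, the same thresholds can then be applied across participants in the controlled study.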

Required Skills

- Unity development with OpenXR.

- Eye-tracking data handling (gaze, pupil, blink).

- BLE integration for smart rings/watches.

- Signal fusion & real-time processing.

- Interest in VR design, affective computing, environmental psychology.

Contact

- Minkyung LEE lee@tuwien.ac.at